As you read this, a seismic shift is underway in the tech industry. Artificial intelligence (AI) is not just transforming how we interact with technology; it is fundamentally reshaping where value is created. This migration of value is changing what a great user experience looks like and redefining our understanding of the “interface layer.”

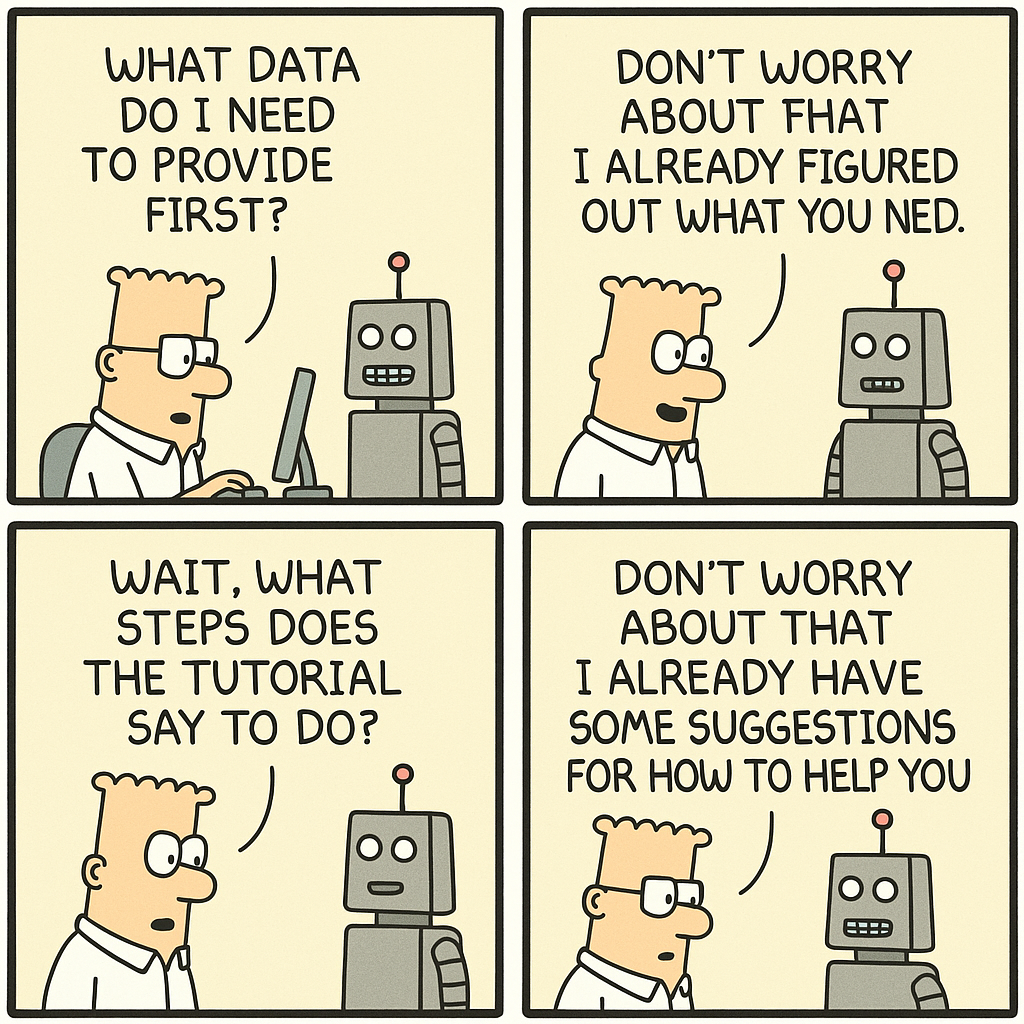

We’re already seeing a proliferation of startups leveraging the same underlying large language models (LLMs) and AI technologies. This democratization of AI has leveled the playing field in many ways, making it easier for startups to compete with established players. However, it also presents a new challenge: how to differentiate in a market where everyone has access to the same AI capabilities.

This is where the interface layer comes into play. As AI becomes more commoditized, the interface layer – the usability, aesthetic beauty, and seamless experiences that a product offers – is becoming the new battleground for differentiation.

Why is this the case? Because the interface layer is where the technology meets the user. It’s the first thing users see and interact with, it shapes their first impression, and it largely determines whether they keep using the product. In other words, the interface layer is the embodiment of the user experience.

What do we mean by the ‘interface layer’?

The interface layer, once a tangible, physical entity, has been progressively transformed into a digital phenomenon and is on the brink of becoming even more abstract and intangible. It’s the layer we engage with: the digital billboards that light up our city streets, the light switches that illuminate our homes, the keyboards that translate our thoughts into words, the touchscreens on our smartphones that connect us to the world, the web bots that assist us with our queries, the smart speakers that respond to our voice commands, and soon, the virtual reality environments that will immerse us in new worlds.

It’s the reason we opt for Airbnb’s homey comfort over traditional hotel bookings, or Spotify’s endless music library over local radio stations.

Why do we make these choices? Because these companies have meticulously crafted interfaces that not only save us precious time but also captivate us. They have become our default interfaces, our “interface layer.” They understand that users don’t adopt products out of obligation, but out of desire.

Take the evolution of the music industry as an example. Once, we were at the mercy of radio DJs or television schedules, waiting for our favorite songs to play. Then came the era of CDs and MP3 players, giving us control over our music choices. And now, we have streaming platforms like Spotify, which not only let us choose our music but also recommend personalized playlists based on our tastes. Each shift in the interface layer has been about enhancing the user’s experience, making it more convenient and personalized. Today, Spotify, the maestro of this new “interface layer”, boasts a valuation of $67 billion, outshining many traditional media conglomerates.

This concept of the “interface layer” extends beyond music. LinkedIn has become the go-to interface for professional networking, replacing traditional job boards and networking events. TikTok has emerged as the new interface for product reviews, with users relying on short, engaging videos rather than lengthy written reviews. Google Maps has become the preferred interface for navigation, replacing paper maps and standalone GPS devices. The triumph of these products is less about the underlying technology and more about the user’s experience of the technology. Increasingly, the ultimate competitive edge is not just about what a product can do, but how quickly and easily it can do it.

As AI technologies become more advanced, they also become more complex. The interface layer plays a crucial role in abstracting this complexity and making the technology accessible and usable. A well-designed interface can make a complex AI system feel simple and intuitive. It can guide users, help them understand the capabilities of the system, and enable them to get the most out of it.

In this new era, the “interface layer” is not just a tool for interaction, but a gateway to experiences. It’s about creating a seamless, intuitive, and enjoyable journey for the user. And as AI continues to evolve, these interfaces will become even more personalized, predictive, and immersive, further transforming the way we interact with technology and the world around us.

So how does AI change interface paradigms?

AI is enabling entirely new forms of interface. Voice assistants like Google Assistant, and chatbots on many platforms, are becoming interface layers in their own right, making our interactions with technology more natural and intuitive.

First, it’s important to understand how AI is driving value migration. Here’s where value is migrating, and why:

- From Rule-Based Systems to Learning Systems: Traditional software operates on predefined rules and logic, but AI systems learn from data and improve over time. This shift is transforming industries like healthcare, where AI systems can learn to diagnose diseases from medical images, or transportation, where self-driving cars learn to navigate roads. The value is migrating to companies that can develop and deploy effective learning systems.

- From Data Quantity to Data Quality: In the early days of big data, companies believed that amassing large volumes of data was the key to unlocking insights. However, as AI models have evolved, it’s become clear that data quality often matters more than sheer volume. High-quality data ensures that AI models are trained effectively and can make accurate predictions. Companies that focus on curating and cleaning their data are finding more value in their AI initiatives.

- From Reactive to Predictive: AI’s ability to analyze vast amounts of data and identify patterns allows it to make predictions. In retail, AI can predict consumer trends, allowing businesses to stock their inventory more efficiently. In healthcare, AI can predict disease outbreaks or patient deterioration. The value is migrating to those who can leverage AI’s predictive capabilities to anticipate and meet needs proactively.

- From Generic to Personalized: AI’s ability to analyze individual behavior and preferences is enabling a new level of personalization. Streaming services like Netflix or Spotify use AI to provide personalized recommendations, improving user engagement and satisfaction. The value is migrating to companies that can use AI to deliver personalized experiences at scale.

- From Centralized Intelligence to Edge AI: AI is moving from the cloud to the edge, with intelligence being built directly into devices at the edge of the network. This allows for faster decision-making and reduces the need for constant connectivity. It’s particularly valuable in areas like IoT, where edge devices need to operate reliably in real time. The value is migrating to companies that can effectively deploy and manage edge AI solutions.

- From Human-Driven Interactions to AI-Driven Interfaces: Voice assistants like Alexa and Siri, and chatbots on various platforms, are becoming new interface layers, making our interactions with technology more natural and intuitive. The value is migrating to companies that can create and manage these AI-driven interfaces effectively.

- From Data Collection to Data Understanding: AI is enabling a shift from mere data collection to understanding and interpreting data. Natural language processing (NLP) can understand and generate human language, while computer vision can understand and interpret images and videos. The value is migrating to companies that can leverage these AI capabilities to extract meaningful insights from data.
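The first shift, from rule-based to learning systems, can be made concrete with a deliberately tiny sketch. Everything in it is a hypothetical illustration: a one-feature task (is a reading “anomalous”?), a hand-picked rule, and a threshold learned from a handful of invented labeled examples using a simple decision stump. It is meant to show the difference in where the decision logic comes from, not to represent a production learning algorithm.

```python
from typing import Callable, List, Tuple

# Hypothetical labeled examples: (reading, is_anomalous). Invented for illustration.
TRAINING: List[Tuple[float, bool]] = [
    (10.0, False), (20.0, False), (30.0, False),
    (35.0, True), (40.0, True), (60.0, True),
]


def rule_based(x: float) -> bool:
    """Traditional software: a developer hard-codes the decision rule."""
    return x > 50.0


def learn_threshold(data: List[Tuple[float, bool]]) -> float:
    """Learn a threshold from data (a one-feature decision stump).

    Each observed value is tried as a candidate threshold, and the one that
    misclassifies the fewest training examples is kept.
    """
    best_t, best_errors = data[0][0], len(data) + 1
    for t, _ in data:
        errors = sum((x > t) != label for x, label in data)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


def make_learned_model(threshold: float) -> Callable[[float], bool]:
    """Wrap the learned threshold as a predictor."""
    return lambda x: x > threshold


if __name__ == "__main__":
    t = learn_threshold(TRAINING)
    model = make_learned_model(t)
    print(f"learned threshold: {t}")      # learned threshold: 30.0
    print(rule_based(38.0), model(38.0))  # False True
```

Feeding in more (or newer) labeled examples and calling `learn_threshold` again moves the decision boundary; the rule-based version only changes when someone edits the code. Real learning systems use far richer models, but the division of labor is the same.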

As AI becomes more sophisticated and integrated into our daily lives, it will transform the way we interact with technology. Here are a few ways AI is expected to change interface paradigms:

- Voice and Conversational Interfaces: As AI-powered voice recognition and natural language processing improve, voice and conversational interfaces will become more prevalent. We’re already seeing this with Amazon’s Alexa and Google Home. In the future, we can expect to interact with many more devices and services through voice. For example, instead of manually adjusting settings on your home appliances, you might simply tell your smart home system what you want, like “Set the temperature to 72 degrees” or “Start the coffee maker at 7 AM.”

- Predictive Interfaces: AI’s ability to analyze data and predict user behavior will lead to more predictive interfaces. These interfaces will anticipate what the user wants to do next and present options or actions accordingly. For example, a predictive email interface might suggest replies based on the content of the received email. Or a predictive shopping interface might suggest products based on the user’s past purchases and browsing behavior.

- Personalized Interfaces: AI will enable more personalized interfaces that adapt to the individual user’s preferences and behavior. For example, a personalized news app might learn what types of articles you like to read and at what time of day you like to read them, and adjust its interface and recommendations accordingly. Or a personalized fitness app might adjust its interface and workout recommendations based on your fitness level and goals.

- Augmented Reality (AR) and Virtual Reality (VR) Interfaces: AI will play a key role in AR and VR interfaces. For example, AI could be used to recognize objects in the real world and provide relevant AR overlays. Or in a VR environment, AI could be used to create intelligent virtual characters that respond to the user’s actions in realistic ways.

- Emotionally Aware Interfaces: AI is getting better at recognizing and responding to human emotions. This could lead to interfaces that adapt based on the user’s emotional state. For example, if a fitness app detects that you’re feeling frustrated during a workout, it might offer words of encouragement or suggest a different exercise.

- Gesture-Based Interfaces: AI can be used to recognize and interpret human gestures, leading to more natural, intuitive interfaces. For example, instead of using a mouse or touchscreen, you might interact with a device or application through gestures in the air.
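Predictive interfaces can start from something much simpler than a large model. As an illustrative sketch (not how any particular product works), the class below counts which action a user tends to take after another, a first-order Markov or “bigram” model, and suggests the most frequent follow-up. The class name and action names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, List


class NextActionPredictor:
    """Toy next-action model: counts observed action-to-action transitions."""

    def __init__(self) -> None:
        # transitions["reply"]["send"] == number of times "send" followed "reply"
        self.transitions: DefaultDict[str, Counter] = defaultdict(Counter)

    def observe(self, session: List[str]) -> None:
        """Record every consecutive pair of actions in one user session."""
        for prev, nxt in zip(session, session[1:]):
            self.transitions[prev][nxt] += 1

    def suggest(self, current: str, k: int = 1) -> List[str]:
        """Return up to k actions most often seen right after `current`."""
        # .get avoids inserting an empty entry for actions never seen before
        counts = self.transitions.get(current, Counter())
        return [action for action, _ in counts.most_common(k)]


if __name__ == "__main__":
    predictor = NextActionPredictor()
    for session in [
        ["open_email", "reply", "send"],
        ["open_email", "reply", "send"],
        ["open_email", "archive"],
    ]:
        predictor.observe(session)

    print(predictor.suggest("open_email"))       # ['reply']
    print(predictor.suggest("open_email", k=2))  # ['reply', 'archive']
    print(predictor.suggest("settings"))         # []
```

A real interface would surface the top suggestion as a one-tap option and would typically blend in more context (content, time of day, recent history), which is where learned models add value over raw counts.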

Ethical considerations in this new paradigm

As we embrace these new interfaces, we must also consider the ethical and regulatory challenges that come with AI. Issues around privacy, bias, and transparency are increasingly important.

Bias in AI: Bias in AI is a significant concern. If the data used to train AI models contains biases (which we know most public internet data does!), those biases can be encoded into the AI services built on it. For example, a model trained on web data that contains gender or racial biases is likely to perpetuate those biases in its predictions or recommendations, leading to unfair or discriminatory outcomes. This is a real issue, and one we must tackle today.

AI-Generated Content and Data Quality: As the web becomes filled with AI-generated content, the quality of data used to train new AI models could be further degraded. AI-generated content might not always reflect the diversity and complexity of human-generated content. For instance, AI-generated content might lack the nuances, context, and cultural understanding that come naturally to humans. If AI models are trained predominantly on AI-generated content, they might not perform as well when interacting with human users or understanding human-generated content.

The issue of bias is exacerbated when AI models are trained on AI-generated content that has been created by biased AI models (which most have!). This creates a feedback loop where biases are continually reinforced and magnified.

Implications for Future Ethical Interfaces

These ethical and data-quality concerns have significant implications for the interfaces of the future. As interfaces become more AI-driven, it’s crucial that they provide fair, unbiased, and accurate interactions.

To ensure this, companies will need to prioritize transparency and explainability in their AI models. Users should be able to understand how an AI model is making decisions. This is particularly important in sectors like healthcare or finance, where AI decisions can have significant impacts on individuals.

Moreover, companies will need to invest in bias detection and mitigation strategies. This could involve regularly auditing AI models for bias, using diverse training data, and involving diverse teams in the development of AI models.
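One concrete form a bias audit can take is comparing how often a model grants a favorable outcome to different groups. The sketch below computes per-group selection rates and the demographic parity gap (highest rate minus lowest). The group labels, the records, the function names, and the 0.1 tolerance are all invented for illustration; demographic parity is only one of several fairness metrics, and passing it alone does not show that a model is fair.

```python
from typing import Dict, List, Tuple

# Each record: (group label, model decision), where True = favorable outcome
# (e.g., a loan approved). Groups and data are hypothetical.
Record = Tuple[str, bool]


def selection_rates(records: List[Record]) -> Dict[str, float]:
    """Share of favorable decisions per group."""
    totals: Dict[str, int] = {}
    favorable: Dict[str, int] = {}
    for group, decision in records:
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + int(decision)
    return {g: favorable[g] / totals[g] for g in totals}


def demographic_parity_gap(records: List[Record]) -> float:
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    if not rates:
        return 0.0  # nothing to compare
    return max(rates.values()) - min(rates.values())


def passes_audit(records: List[Record], tolerance: float = 0.1) -> bool:
    """Fail the audit if the gap exceeds `tolerance` (an arbitrary example value)."""
    return demographic_parity_gap(records) <= tolerance


if __name__ == "__main__":
    records = (
        [("A", True)] * 6 + [("A", False)] * 2
        + [("B", True)] * 3 + [("B", False)] * 5
    )
    print(selection_rates(records))         # {'A': 0.75, 'B': 0.375}
    print(demographic_parity_gap(records))  # 0.375
    print(passes_audit(records))            # False
```

Run regularly (for example, on each model release), a check like this turns “audit for bias” into a measurable gate, although choosing the metric and the tolerance is a policy decision as much as a technical one.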

In addition, as AI interfaces become more prevalent, there will be a growing need for regulation to ensure that these interfaces are used ethically and responsibly. This could involve regulations around data privacy, transparency, and accountability.

Conclusion

Companies that can navigate these issues while delivering a delightful user experience will be the ones that truly own the “interface layer”.

As AI continues to evolve, our definition of interfaces will change, and industries will be shaken. But which ones? That remains to be seen. What we do know is that in this great migration of value, the winners will be those who put customers at the heart of their services and own something tangible. They will be the ones who understand that users adopt products not because they have to, but because they want to.