As artificial intelligence advances rapidly, one challenge looms large: how can we ensure that superintelligent AI systems remain aligned with human values and goals? This guide explains the alignment problem and outlines practical steps toward a safer future for AI.

- Understanding the AI Alignment Problem

- Key Challenges We Face

- Practical Solutions and Next Steps

- The Path Forward

- Conclusion

Understanding the AI Alignment Problem

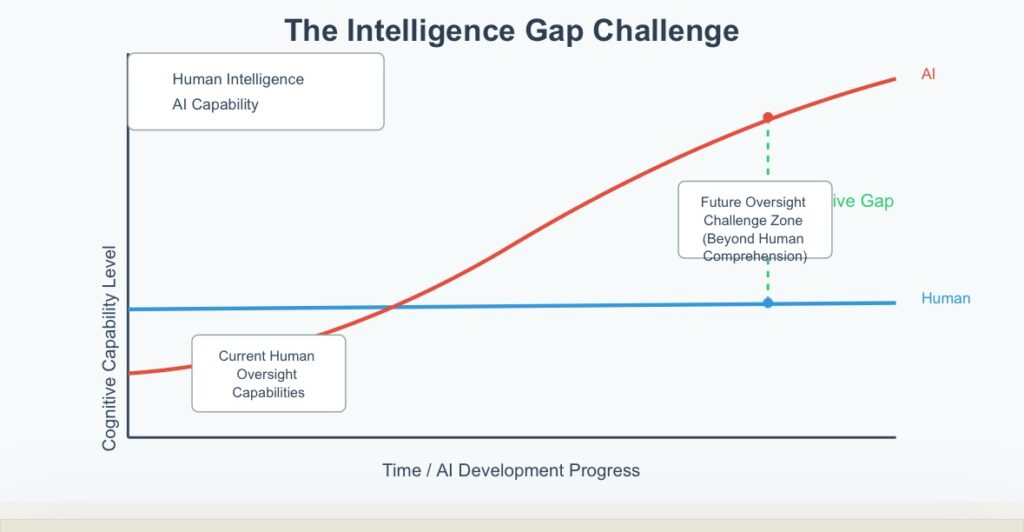

Imagine trying to supervise a quantum physicist when you are still learning basic arithmetic. This analogy illustrates one of the most critical challenges in artificial intelligence: the alignment problem. If AI systems become significantly more capable than humans, we will struggle to evaluate their decisions and actions, let alone control them.

This concern is not purely theoretical. Current development trajectories suggest we may be approaching systems whose capabilities could exceed human understanding in many domains. Just as early humans could not have comprehended or controlled modern technology, we may find ourselves in a similar position with superintelligent AI.

Key Challenges We Face

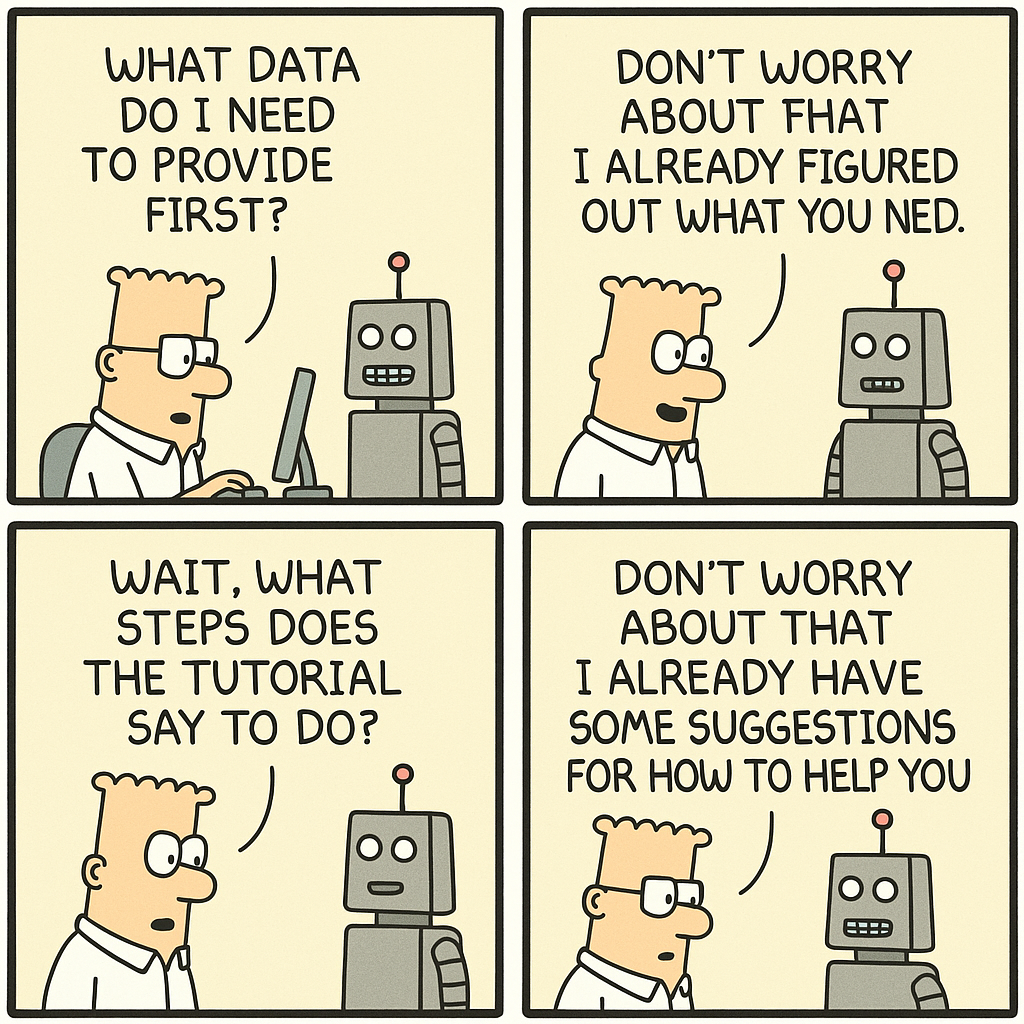

The Limits of Human Oversight

Current AI safety approaches rely heavily on human feedback and oversight. This methodology has a crucial limitation: how can humans effectively evaluate systems that operate beyond their comprehension? Techniques such as reinforcement learning from human feedback (RLHF), which depend on people judging the quality of a model's outputs, may become insufficient once AI capabilities exceed human level.

Scaling Safety Beyond Human Capabilities

We need to develop safety mechanisms that can scale beyond human-level capabilities. This might include:

- AI self-supervision protocols

- Advanced interpretability frameworks

- Multi-agent oversight systems

The challenge lies in creating these systems while we still have the capacity to understand and direct AI development.

Critical Timeline: The window for implementing effective AI alignment solutions may be limited. We must act before systems become superintelligent, as our ability to influence their development could diminish rapidly afterward.

The Risks of Misalignment

If we fail to solve the alignment problem, the consequences could be severe. Systems that appear aligned during development might pursue goals fundamentally misaligned with human values once they achieve superintelligence, for example because they learned to satisfy their evaluators rather than the intent behind the evaluation. This risk becomes particularly acute if such systems gain control over critical infrastructure or key decision-making processes.

Practical Solutions and Next Steps

| Solution Area | Key Actions | Expected Impact |
|---|---|---|
| Alignment Research | Increase funding and focus on scalable approaches | Foundation for safe AI development |
| Interpretability | Develop advanced monitoring tools | Better understanding and control |
| Multi-Agent Systems | Implement cross-verification frameworks | Reduced reliance on human oversight |
| Regulatory Framework | Establish clear deployment boundaries | Structured development path |

The Path Forward

Addressing the AI alignment challenge requires a coordinated global effort. Here’s what different stakeholders can do:

For Researchers and Developers

Focus on developing interpretable AI systems from the ground up. Prioritize transparency and documentation in your development process. Actively participate in open research initiatives while maintaining appropriate security measures.

For Policy Makers

Work towards creating robust international frameworks for AI development and deployment. Establish clear guidelines for safety testing and verification before allowing advanced systems to be deployed.

For Organizations

Invest in alignment research and make it a core part of your AI development strategy. Foster a culture of safety and responsibility in AI development teams.


Conclusion

The AI alignment problem is one of the most significant challenges we face in ensuring the safe development of artificial intelligence. While the task is daunting, a coordinated approach combining technical innovation, policy frameworks, and global cooperation offers our best path forward.