In the rush to innovate, healthcare organizations are investing heavily in artificial intelligence solutions. From revenue cycle optimization to clinical workflow enhancement, the promise of AI draws significant attention and resources. Yet a concerning pattern emerges: impressive proofs of concept (PoCs) that never make it to production deployment.

The Core Challenge

At its heart, this gap between demo and deployment reveals a fundamental misunderstanding. A successful PoC proves technical feasibility but says little about real-world viability. Healthcare environments present unique complexities that controlled demonstrations simply cannot capture. Legacy systems, variable data quality, intricate workflows, and stringent regulations create a challenging landscape that requires more than technical excellence.

A PoC is not a commercial solution. Strong performance in a test environment does not guarantee success at scale. The transition from a promising PoC to a fully integrated, regulatory-compliant, and clinically valuable AI system requires careful planning across multiple dimensions.

Why Many Healthcare AI Projects Get Stuck at PoC

Artificial intelligence in healthcare faces challenges that are often underestimated in the early stages of development. The following barriers frequently prevent AI from moving beyond the proof-of-concept phase.

Lack of Clear Business and Clinical Value Validation

AI is not valuable just because it is sophisticated or technically impressive. The real measure of success is whether it delivers tangible healthcare outcomes, operational efficiency, and return on investment.

Many AI projects fail to define clear success metrics before development begins. Whether an AI model is designed to optimize patient scheduling, automate claims processing, or support clinical decision-making, the question should always be: Does this AI system measurably improve patient care, reduce administrative burden, or drive financial value?

Without predefined metrics such as reduced processing time, increased accuracy, or improved clinical outcomes, there is no way to determine whether an AI model is truly making a difference.
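One way to make this concrete is to write the success criteria down as data before any model is built, then score pilot results against them. The sketch below is a minimal illustration of that idea, not a prescribed method. The metric names, baselines, targets, and pilot values are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessMetric:
    """A success criterion agreed on before development starts."""
    name: str
    baseline: float          # current performance without the AI system
    target: float            # value the AI system must reach
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


def evaluate(metrics, observed):
    """Return {metric name: met?}. A metric with no observation counts as not met."""
    results = {}
    for metric in metrics:
        value = observed.get(metric.name)
        results[metric.name] = value is not None and metric.met(value)
    return results


# Hypothetical criteria and pilot results, for illustration only.
METRICS = [
    SuccessMetric("claim_processing_minutes", baseline=12.0, target=8.0,
                  higher_is_better=False),
    SuccessMetric("coding_accuracy", baseline=0.91, target=0.95),
]
pilot = {"claim_processing_minutes": 7.5, "coding_accuracy": 0.93}
results = evaluate(METRICS, pilot)
print(results)
```

In this made-up pilot, the processing-time target is met and the accuracy target is not, which is exactly the kind of answer a demo alone cannot give.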

Failure to Address Real-World Healthcare Complexity

A proof of concept often works in isolation with clean, well-structured data, but the real world of healthcare is much messier. AI solutions must be robust enough to handle:

• Integration with legacy systems. Many healthcare organizations still rely on outdated electronic health record systems, custom-built infrastructure, and siloed data repositories. AI models must work within these constraints.

• Variable data quality. Clinical data is often incomplete, inconsistent, and unstructured. A PoC trained on carefully curated datasets may not function effectively when faced with real-world patient records.

• Evolving workflows. Healthcare operations are constantly changing due to regulatory updates, reimbursement policy shifts, and new care delivery models. AI systems must be adaptable to these shifts rather than becoming obsolete.
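The data quality point can be checked early with a simple completeness report on a sample of real records. The following is a rough sketch under stated assumptions: the field names, the sample records, and the 30% threshold are all hypothetical, and a real check would also cover consistency and formatting, not just missing values.

```python
def is_missing(value):
    """Treat None and blank or whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())


def missing_rates(records, required_fields):
    """Return the share of records in which each required field is missing."""
    if not records:
        raise ValueError("no records to assess")
    total = len(records)
    return {
        field: sum(1 for record in records if is_missing(record.get(field))) / total
        for field in required_fields
    }


def fields_over_threshold(rates, threshold):
    """List the fields whose missing rate exceeds the agreed threshold."""
    return sorted(field for field, rate in rates.items() if rate > threshold)


# Hypothetical sample of patient records, for illustration only.
sample = [
    {"date_of_birth": "1980-02-11", "diagnosis_code": "E11.9"},
    {"date_of_birth": "1975-07-30", "diagnosis_code": ""},
    {"date_of_birth": None, "diagnosis_code": "I10"},
    {"date_of_birth": "1992-12-01"},
]
rates = missing_rates(sample, ["date_of_birth", "diagnosis_code"])
flagged = fields_over_threshold(rates, threshold=0.30)
print(rates, flagged)
```

Running a report like this against live data, rather than the curated PoC dataset, shows how far the two diverge before the model ever sees production traffic.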

Inadequate Compliance, Privacy, and Security Protections

AI models in healthcare are not just processing generic data. They are handling protected health information, financial records, and sensitive patient details. Every AI initiative, whether focused on claims processing, diagnostic support, or patient communication, must be designed from the ground up with HIPAA compliance, data security, and auditability in mind.

Without rigorous oversight, an AI model may introduce unintentional biases, security risks, or regulatory violations that could compromise patient safety and organizational reputation. Privacy and compliance must be embedded into the development process rather than treated as an afterthought.

No AI Lifecycle Management Strategy

A PoC may demonstrate high accuracy when initially developed, but model performance degrades over time as real-world data shifts away from the data the model was trained on. Without a plan for ongoing monitoring, validation, and retraining, that decline goes undetected and uncorrected.

A robust AI strategy requires:

• Continuous model monitoring to detect accuracy drift and biases.

• A governance framework that defines when and how retraining occurs.

• Human oversight to ensure the AI system remains clinically valid and safe.

If there is no plan for long-term AI maintenance, the system will eventually become unreliable, leading to wasted investment and potential patient harm.
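As a minimal sketch of what continuous monitoring with a governance trigger might look like, the class below tracks accuracy over a rolling window of confirmed outcomes and flags the model for human review when accuracy falls too far below its validated baseline. The class name, the window size, and the 5-point tolerance are assumptions chosen for illustration. A production setup would also track input drift and subgroup performance.

```python
from collections import deque


class AccuracyMonitor:
    """Rolling accuracy check against a validated baseline (illustrative)."""

    def __init__(self, baseline_accuracy, max_drop=0.05, window=100):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop                 # tolerated drop before review
        self.outcomes = deque(maxlen=window)     # keeps only the latest results

    def record(self, prediction, actual):
        """Store whether a prediction matched the confirmed outcome."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """Flag for human review once a full window falls below tolerance."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        return self.rolling_accuracy() < self.baseline - self.max_drop


# Hypothetical usage: 8 of the last 10 predictions were correct.
monitor = AccuracyMonitor(baseline_accuracy=0.90, max_drop=0.05, window=10)
for prediction, actual in [(1, 1)] * 8 + [(1, 0)] * 2:
    monitor.record(prediction, actual)
print(monitor.rolling_accuracy(), monitor.needs_review())
```

The flag only requests a review. Whether to retrain, recalibrate, or withdraw the model remains a decision for the people who own governance.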

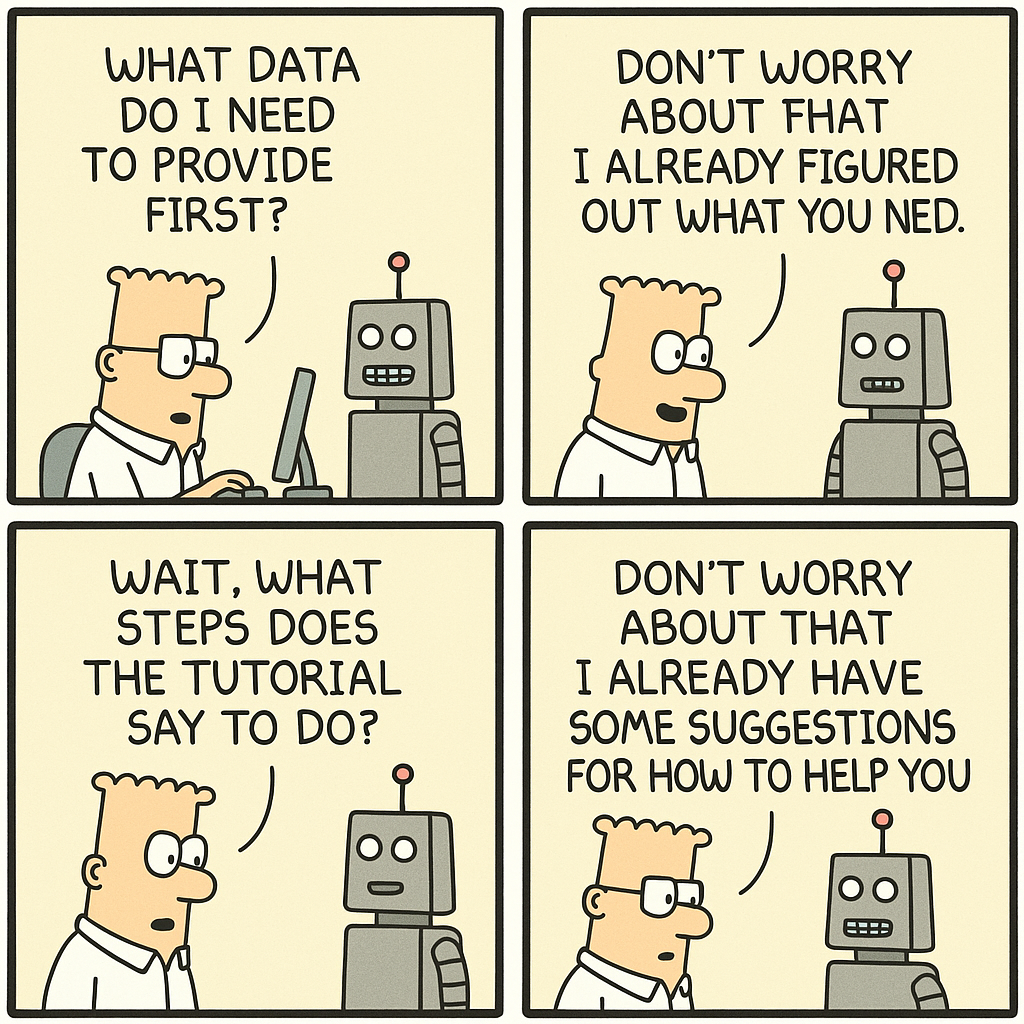

Failure to Drive Adoption Among Healthcare Stakeholders

The best AI system is useless if clinicians, administrators, or operational teams do not trust or use it. Many AI initiatives fail not because the technology does not work, but because stakeholders were not involved early enough in the process.

Adoption requires more than technical performance. It depends on:

• Clinician trust. If doctors and nurses do not believe an AI model provides accurate, explainable recommendations, they will reject it.

• Seamless workflow integration. AI should enhance, not disrupt, existing clinical and administrative workflows.

• Comprehensive training and support. Teams must understand not only how to use AI, but also when and why they should rely on it.

If AI adoption is treated as an afterthought, even the most advanced solutions will fail to drive meaningful impact.

How to Ensure a PoC Transitions to a Real-World AI Solution

Before moving forward with a healthcare AI PoC, teams should address the following questions:

Define Success Criteria Beyond the Demo

• Does the AI initiative have clear business and clinical value metrics?

• Are we testing performance against real-world benchmarks, not just idealized datasets?

• Have we identified key stakeholders who will ultimately use the AI solution?

Plan for Real-World Integration from Day One

• Does the AI model work with live, real-time patient data, not just static test sets?

• Can it integrate with existing healthcare IT systems and workflows?

• How will it handle missing, incomplete, or unstructured clinical data?

Ensure Compliance, Bias Mitigation, and Security

• Are HIPAA, FDA, and other regulatory requirements fully addressed?

• Have we implemented bias detection and mitigation protocols?

• Does the AI system have explainability and transparency mechanisms for users?
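For the bias detection question, one simple starting point is to compare the model's true positive rate across patient groups and look at the largest gap. The sketch below illustrates only that single check. The group labels and rows are made up, and the choice of fairness measure and acceptable gap is an assumption that each organization would need to set with clinical and compliance input.

```python
from collections import defaultdict


def true_positive_rate_by_group(rows):
    """rows: iterable of (group, actual, predicted) with 0/1 labels.

    Returns, for each group with at least one actual positive, the share
    of those positives the model caught.
    """
    positives = defaultdict(int)
    hits = defaultdict(int)
    for group, actual, predicted in rows:
        if actual == 1:
            positives[group] += 1
            if predicted == 1:
                hits[group] += 1
    return {group: hits[group] / positives[group] for group in positives}


def largest_gap(rates):
    """Difference between the best- and worst-served groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation rows: (group, actual, predicted).
rows = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
tpr = true_positive_rate_by_group(rows)
gap = largest_gap(tpr)
print(tpr, gap)
```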

Establish a Long-Term AI Lifecycle Management Plan

• How will the AI model be monitored for accuracy drift and reliability?

• What is the process for ongoing retraining and continuous improvement?

• Who owns the governance and oversight of AI decision-making?

Develop a Comprehensive Change Management Strategy

• How will we ensure clinicians and operational staff trust and adopt the AI?

• What training, documentation, and feedback loops will support adoption?

• How will AI outputs be integrated into existing decision-making processes?

Conclusion: AI in Healthcare Must Be Built for the Long Game

A PoC proves that something is technically possible, but a real AI strategy ensures clinical and operational impact. Too many healthcare AI initiatives stall because they focus on developing a functional model without planning for governance, compliance, integration, and adoption.

Healthcare AI is not about cool technology. It is about delivering measurable improvements in patient care, efficiency, and cost. The organizations that succeed in AI will be the ones that move beyond the PoC mindset and focus on long-term, sustainable, and trustworthy AI deployment.

What are the biggest challenges you’ve seen in moving healthcare AI from PoC to real-world implementation? Share your insights in the comments.